Perceptron

The perceptron is one of the earliest artificial neuron models. It builds on the threshold neuron of [McCulloch & Pitts 1943]: it outputs 1 when a weighted sum of its inputs exceeds a threshold, and 0 otherwise.
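A minimal sketch of a threshold neuron in plain Python. The function name `perceptron` and the AND weights are illustrative assumptions, not something taken from the slides.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

# Hypothetical, hand-picked weights that make the neuron act like logical AND.
and_weights, and_bias = [1.0, 1.0], -1.5
outputs = [perceptron([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(outputs)  # [0, 0, 0, 1]
```

Here the bias plays the role of the (negated) threshold: the neuron fires only when both inputs are 1.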

Sigmoid Neuron

The sigmoid neuron replaces the hard threshold with a smooth, continuous function (the same logistic function used in logistic regression!)
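As a rough illustration, the same weighted sum can be passed through the logistic function instead of a hard step. The names `sigmoid` and `sigmoid_neuron` are assumptions made for this sketch.

```python
import math

def sigmoid(z):
    """Logistic function: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_neuron(inputs, weights, bias):
    """Weighted sum of the inputs followed by the sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

print(sigmoid(0.0))                              # 0.5
print(sigmoid_neuron([1, 1], [1.0, 1.0], -1.5))  # about 0.62
```

Unlike the step function, small changes in the weights produce small changes in the output, which is what makes gradient-based training possible.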

Neural Networks

A neural network can be thought of as a directed graph of artificial neurons.

Typically, neurons are organized in layers. The last layer is considered the output.

Outputs might represent things like the probability that an input belongs to a category, e.g. cat vs. dog in an image.
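One way to picture the layered structure is a tiny forward pass: two inputs, three hidden sigmoid neurons, and one output read as a probability. All weights below are made-up numbers, and `dense_layer` is a hypothetical helper, so treat this as a sketch rather than a trained model.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One layer: each row of `weights` holds the weights of one neuron."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_w = [[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]]
hidden_b = [0.0, 0.1, -0.1]
output_w = [[1.0, -1.0, 0.5]]
output_b = [0.2]

x = [1.0, 2.0]
hidden = dense_layer(x, hidden_w, hidden_b)          # hidden layer activations
p_cat = dense_layer(hidden, output_w, output_b)[0]   # last layer = output
print(f"P(cat) = {p_cat:.3f}, P(dog) = {1 - p_cat:.3f}")
```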

Neural Networks

Why are neural networks so powerful?

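Roughly, the Universal Approximation Theorem states that for any continuous function on a bounded domain, there exists a neural network that approximates it to any desired accuracy.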

Note that it does not say how to find that neural network! However, the property of existence alone suggests that neural networks can learn complex patterns and relationships.
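For reference, one classical version of the theorem (a single hidden layer of sigmoid neurons) can be written as follows. The symbols $f$, $K$, $N$, $v_i$, $w_i$, $b_i$ and $\sigma$ are notation introduced here, not taken from the slides.

```latex
For every continuous $f : K \to \mathbb{R}$ on a compact set $K \subset \mathbb{R}^n$
and every $\varepsilon > 0$, there exist $N \in \mathbb{N}$, $v_i, b_i \in \mathbb{R}$
and $w_i \in \mathbb{R}^n$ such that
\[
  \sup_{x \in K} \Bigl| f(x) - \sum_{i=1}^{N} v_i \, \sigma(w_i^\top x + b_i) \Bigr| < \varepsilon ,
\]
where $\sigma$ is the sigmoid function.
```

The statement only guarantees that suitable $N$, $v_i$, $w_i$, $b_i$ exist; $N$ may need to be very large.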

Training Neural Networks

How do we train our neural network?

First, we must define a loss function, i.e. a measure of how far the output is from what we want. We want to minimize the loss function, so we typically pick functions that are differentiable (have a derivative).
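Two commonly used loss functions, sketched in plain Python. The slides do not name a specific loss, so mean squared error and binary cross-entropy are chosen here as assumptions, and the sample predictions are made up.

```python
import math

def mean_squared_error(targets, predictions):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)

def binary_cross_entropy(targets, predictions, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels; eps avoids log(0)."""
    total = 0.0
    for t, p in zip(targets, predictions):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(targets)

targets = [1, 0, 1]
good = [0.9, 0.1, 0.8]   # close to the targets -> small loss
bad = [0.2, 0.7, 0.4]    # far from the targets -> large loss
print(mean_squared_error(targets, good), mean_squared_error(targets, bad))
print(binary_cross_entropy(targets, good), binary_cross_entropy(targets, bad))
```

Both functions are smooth in the predictions, so their derivatives can be used to decide how to adjust the weights.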

Then, we simply aim to pick the weights for each neuron that minimize the loss. But how do we do this efficiently?

Backpropagation & Gradient Descent

Backpropagation is a method to determine the gradient of the loss function with respect to the weights of all neurons.

Once the gradient is known, a technique called gradient descent uses it to move the weights step by step toward a (possibly local) minimum of the loss function.
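For a single sigmoid neuron, the gradient can be written out by hand with the chain rule, which is the core idea that backpropagation applies layer by layer from the output back to the input. The sketch below assumes a squared-error loss and one training example; the function names and numbers are illustrative only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of a single sigmoid neuron on one example."""
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def gradients(w, b, x, y):
    """Chain rule: dL/dw = dL/da * da/dz * dz/dw (and likewise for b)."""
    a = sigmoid(w * x + b)
    dL_da = a - y
    da_dz = a * (1.0 - a)
    return dL_da * da_dz * x, dL_da * da_dz  # dz/dw = x, dz/db = 1

w, b, x, y = 0.5, -0.3, 2.0, 1.0
grad_w, grad_b = gradients(w, b, x, y)

# Sanity check against a finite-difference estimate of dL/dw.
h = 1e-6
numeric_w = (loss(w + h, b, x, y) - loss(w - h, b, x, y)) / (2 * h)
print(grad_w, numeric_w)  # the two values should agree closely
```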

Backpropagation & Gradient Descent

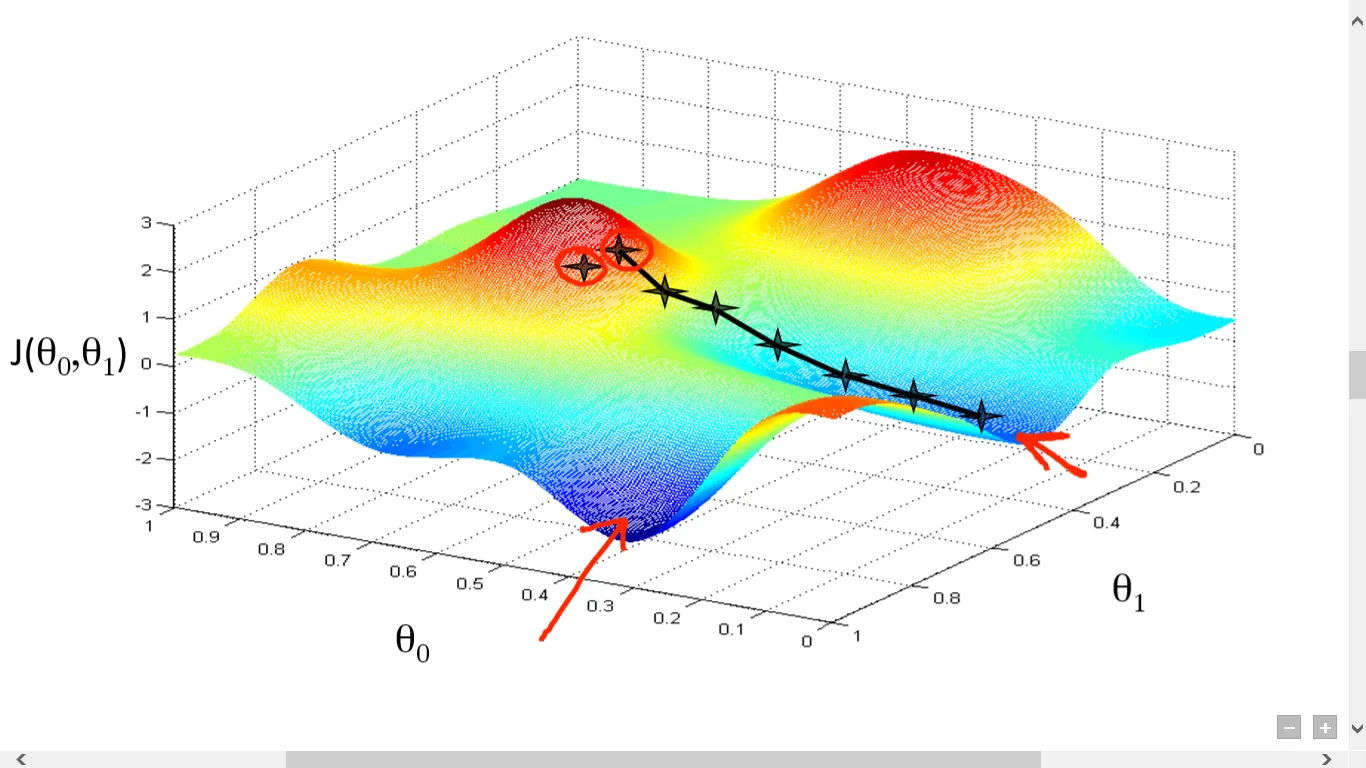

Think of rolling a ball from a random point on the loss function: eventually, it should reach some sort of "bottom". This is the key idea behind gradient descent.

At each "step", the negative gradient tells us the direction in which to adjust our weights, and we keep stepping until we eventually reach a minimum!
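The rolling-ball picture can be sketched on a one-dimensional loss. The quadratic loss, the starting point, and the learning rate below are all assumptions chosen to keep the example small.

```python
def loss(w):
    """A toy loss with its minimum at w = 3."""
    return (w - 3.0) ** 2

def gradient(w):
    """Derivative of the toy loss."""
    return 2.0 * (w - 3.0)

w = -4.0              # arbitrary starting point
learning_rate = 0.1   # step size (an assumed value)
for step in range(100):
    w -= learning_rate * gradient(w)   # move against the gradient

print(round(w, 4))  # 3.0
```

A learning rate that is too large can overshoot the minimum, while one that is too small makes progress very slow.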

Recap

To train a neural network:

- Pick a neuron model and create a network shape

- Pick random weights

- Use backpropagation and gradient descent to update weights

- Repeat until model converges

Bad news: the last two steps are computationally expensive and may even require specialized hardware (lots of matrix multiplication = need for GPUs)
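The recap can be sketched end to end for the smallest possible "network": one sigmoid neuron learning the logical OR function. The dataset, learning rate, and epoch count are assumptions for illustration, and the update uses the cross-entropy gradient for a sigmoid output.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Toy dataset (an assumption for illustration): the logical OR function.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

# Network shape: a single sigmoid neuron with two inputs.
random.seed(0)
weights = [random.uniform(-1, 1) for _ in range(2)]   # pick random weights
bias = 0.0
learning_rate = 0.5

for epoch in range(2000):                             # repeat until it settles
    for x, y in data:
        error = predict(weights, bias, x) - y         # dLoss/dz for cross-entropy
        weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
        bias -= learning_rate * error                 # gradient descent update

predictions = [round(predict(weights, bias, x)) for x, _ in data]
print(predictions)  # [0, 1, 1, 1]
```

Even this toy loop performs thousands of updates; real networks repeat the same pattern over millions of weights, which is where the cost comes from.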

Keras

Good news: you don't have to implement any of this!

Keras is a deep learning API that plugs into various backends. Backends are libraries that implement the aforementioned heavy lifting for you, e.g. PyTorch, JAX, or TensorFlow.

Keras provides a coding framework to easily define your neural nets, loss functions, etc.
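A minimal sketch of what this might look like, assuming Keras 3 and NumPy are installed along with at least one backend. The layer sizes, optimizer, loss, and toy OR dataset are illustrative choices, not recommendations from the slides.

```python
import numpy as np
import keras
from keras import layers

# Network shape: 2 inputs -> 4 hidden sigmoid neurons -> 1 sigmoid output.
model = keras.Sequential([
    keras.Input(shape=(2,)),
    layers.Dense(4, activation="sigmoid"),
    layers.Dense(1, activation="sigmoid"),
])

# Loss function and gradient descent variant are chosen by name.
model.compile(optimizer="sgd", loss="binary_crossentropy", metrics=["accuracy"])

# Toy data (an assumption): the logical OR function.
x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype="float32")
y = np.array([0, 1, 1, 1], dtype="float32")

# Backpropagation and weight updates happen inside fit().
model.fit(x, y, epochs=5, verbose=0)
print(model.count_params())  # 17
```

Five epochs are far too few to learn anything useful here; the point is only that defining the network, the loss, and the training loop takes a handful of lines.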